When it comes to SEO, every detail makes a difference, and one of the most overlooked yet powerful optimization tools is the `robots.txt` file. This file acts as a gatekeeper for search engine crawlers, telling them which pages they may crawl and which they should skip. It may seem technical or insignificant at first, but a well-structured file can noticeably improve your website’s crawl efficiency, visibility, and overall search performance. In this article, we’ll explore how to use it effectively to gain better control over your site’s presence on Google, and why it’s an essential element of every successful SEO strategy.

What is the robots.txt file?

It is a simple text file placed in the root directory of most websites to communicate with crawlers, or bots; for example, yourwebsite.com/robots.txt. The file tells bots which pages of the website they are permitted to visit. A bot is an automated software application that interacts with websites and applications. Some bots are beneficial and some are harmful. Crawler bots are one category of beneficial bots: they traverse web pages and index their content so that it can appear in search engine results. The robots.txt file manages the activity of these crawlers so that they do not overload the web server hosting the website or crawl pages that are not intended for public viewing.

Importance of robots.txt for SEO:

A properly designed file improves SEO and website visibility in several ways:

- Manage crawling priorities: Instruct bots to skip unnecessary pages and concentrate on important content.

- Make the most of sitemaps: Point crawlers to your sitemap so they can discover and index your important pages efficiently.

- Preserve server resources: Cut down pointless bot activity so your server is not burdened with unnecessary HTTP requests.

- Keep private areas out of crawlers’ paths: Ask compliant crawlers not to access files you don’t want crawled. Keep in mind that robots.txt is publicly readable and is not a security measure, so truly sensitive files still need password protection.

- Strengthen your SEO strategy: Focus crawlers on the right sections of your site and make better use of your crawl budget.

How does a robots.txt file work?

Hosted on a web server, this file is plain text with no HTML markup. To view it, type the homepage URL and add /robots.txt. Although no other page links to it, most crawlers request it before crawling anything else on the site. The file cannot enforce its instructions; it can only state them. A good bot reads it first and follows its rules, while a bad bot may ignore it, or even read it to find the pages you would rather keep hidden.
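To make the voluntary nature of the file concrete, here is a minimal sketch of what a well-behaved crawler does before requesting a page, using Python’s standard-library `urllib.robotparser`. The robots.txt content, the bot name `ExampleBot`, and the URLs are hypothetical, and the file is parsed from a string rather than downloaded so the example runs offline. The check lives entirely in the crawler’s own code: nothing stops a bad bot from skipping it.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content; a real crawler would download it
# from https://<host>/robots.txt before fetching anything else.
ROBOTS_TXT = """\
User-agent: *
Disallow: /admin/
"""


def polite_fetch_allowed(robots_txt: str, user_agent: str, url: str) -> bool:
    """Return True if a well-behaved bot may fetch `url` under these rules."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


for url in ("https://example.com/blog/post", "https://example.com/admin/login"):
    allowed = polite_fetch_allowed(ROBOTS_TXT, "ExampleBot", url)
    # A good bot skips disallowed URLs; a bad bot could simply not call this.
    print(url, "->", "fetch" if allowed else "skip")
```

Run as is, it prints `fetch` for the blog post and `skip` for the admin page.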

How to create and structure a robots.txt file?

Robots.txt files draw on two protocols, a protocol being simply an agreed format for giving instructions. The main one is the Robots Exclusion Protocol, which tells bots which web pages to avoid. The other is the Sitemaps protocol, which acts as a robot inclusion protocol because it shows crawlers which pages they can crawl.

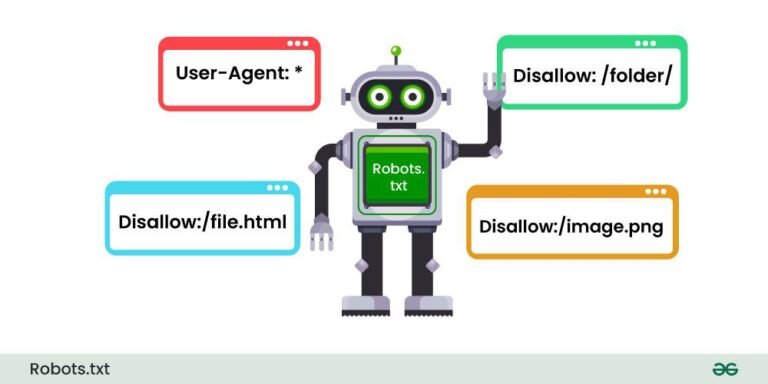

Robots.txt recognizes four main directives:

- User-agent: Specifies which bot, or group of bots, the rules that follow apply to.

- Disallow: Identifies the resources, files, or directories that the user-agent is not allowed to access.

- Allow: Sets up exceptions, e.g., to permit access to an individual folder or file inside an otherwise disallowed directory.

- Sitemap: Points bots to the URL location of a website’s sitemap.
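As a sketch of how the four directives fit together, the hypothetical file below disallows a `/private/` directory for every bot, makes an exception for one file inside it, and lists a sitemap. Python’s standard-library `urllib.robotparser` is used only to show how a compliant parser reads it; the paths and the sitemap URL are made up for illustration.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt using all four directives.
ROBOTS_TXT = """\
User-agent: *
Allow: /private/annual-report.html
Disallow: /private/

Sitemap: https://www.example.com/sitemap.xml
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

for path in ("/about/", "/private/drafts.html", "/private/annual-report.html"):
    url = "https://www.example.com" + path
    print(path, "allowed" if parser.can_fetch("*", url) else "blocked")

# Sitemap lines are collected separately from the user-agent rules.
print(parser.site_maps())
```

This prints `allowed`, `blocked`, `allowed` for the three paths, then `['https://www.example.com/sitemap.xml']`. Python’s parser applies the first rule that matches, which is why the `Allow` line comes first; Google instead applies the most specific (longest) matching rule, so for Googlebot the order would not matter.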

Basic format:

- `User-agent: [user-agent name]`

- `Disallow: [URL string not to be crawled]`

What is a user agent?

Every person or program that uses the Internet has a “user agent”: the software acting on their behalf, which identifies itself to websites with a user-agent string. For human users, this string includes details like the browser type and operating system version, but no personal information. It helps websites serve content that suits the user’s system.

For bots, the user agent helps website managers identify which bots are crawling the site.

In a robots.txt file, a website manager can give specific bots specific instructions by writing separate groups of rules for their user agents.
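A minimal sketch of bot-specific instructions: the hypothetical file below gives Googlebot its own group of rules and every other bot a stricter default group. Python’s `urllib.robotparser` stands in for a real crawler, and the paths are illustrative assumptions.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical file: Googlebot gets its own group; "*" covers every other bot.
ROBOTS_TXT = """\
User-agent: Googlebot
Disallow: /search/

User-agent: *
Disallow: /
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

url = "https://www.example.com/products/"
for agent in ("Googlebot", "Bingbot"):
    # Each bot follows the group that names it, falling back to "*".
    print(agent, parser.can_fetch(agent, url))
```

This prints `Googlebot True` and `Bingbot False`. A crawler follows only the one group that best matches its user agent, so the blanket `Disallow: /` in the `*` group does not apply to Googlebot here.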

Common search engine bot user agent names include:

Google:

- Googlebot.

- Googlebot-Image (for images).

- Googlebot-News (for news).

- Googlebot-Video (for video).

Bing:

- Bingbot.

- MSNBot-Media (for images and video).

Baidu:

- Baiduspider.

How do ‘Disallow’ commands work in a robots.txt file?

The Disallow command is the core directive of the robots exclusion protocol: it tells bots not to access the web page, or set of pages, whose path follows it. These pages are not necessarily hidden; they are usually just not useful to average visitors.

- Block one web page: After `Disallow:`, include the part of the page’s URL that follows the domain. Good bots will then skip that page. Blocking crawling does not guarantee the page stays out of search results, though: if other sites link to it, it can still be indexed, usually without a description. To keep a page out of results reliably, use a noindex tag and leave the page crawlable so search engines can see it.

- Block one directory: Blocking multiple pages at once can be more efficient than listing them individually. If the pages sit in the same directory, a single rule naming that directory blocks all of them.

- Allow full access: A `Disallow:` line with an empty value tells bots that nothing is prohibited, so they can explore the entire website.

- Hide the entire website from bots: `Disallow: /`, where “/” represents the root of the website’s hierarchy, stops search engine bots from crawling any page, effectively removing the site’s content from the searchable Internet.
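The four cases above can be checked side by side. This sketch feeds four hypothetical one-group files to Python’s `urllib.robotparser` and asks which sample pages a generic bot may fetch; `/old-page.html` and `/archive/` are placeholder paths, not recommendations.

```python
from urllib.robotparser import RobotFileParser

# One hypothetical file per case described above.
CASES = {
    "block one page": "User-agent: *\nDisallow: /old-page.html",
    "block one directory": "User-agent: *\nDisallow: /archive/",
    "allow full access": "User-agent: *\nDisallow:",
    "hide the entire site": "User-agent: *\nDisallow: /",
}

SAMPLE_PATHS = ("/old-page.html", "/archive/2020.html", "/contact/")


def allowed_paths(robots_txt: str) -> list[str]:
    """Return the sample paths a compliant bot may crawl under robots_txt."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [p for p in SAMPLE_PATHS
            if parser.can_fetch("*", "https://www.example.com" + p)]


for name, robots_txt in CASES.items():
    print(f"{name}: {allowed_paths(robots_txt)}")
```

The output shows the single page disappearing in the first case, the whole `/archive/` directory in the second, nothing in the third, and every page in the fourth.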

What is the Sitemaps protocol?

The Sitemaps protocol helps bots crawl websites by providing a machine-readable list of the site’s pages. Links to these sitemaps can be included in the robots.txt file, in the format “Sitemap:” followed by the XML file’s full web address. Sitemaps do not force crawler bots to prioritize web pages differently.
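To show what “machine-readable” means here, the sketch below builds a minimal XML sitemap with Python’s standard-library `xml.etree.ElementTree` and prints the matching `Sitemap:` line for robots.txt. The page URLs and the sitemap location are hypothetical; real sitemaps usually start with an XML declaration and may carry optional tags such as `<lastmod>`, which are omitted here.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(page_urls: list[str]) -> str:
    """Return a minimal sitemap XML document listing page_urls."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for page_url in page_urls:
        url_el = ET.SubElement(urlset, "url")
        ET.SubElement(url_el, "loc").text = page_url
    return ET.tostring(urlset, encoding="unicode")


# Hypothetical pages and sitemap location.
pages = ["https://www.example.com/", "https://www.example.com/services/"]
print(build_sitemap(pages))
print("Sitemap: https://www.example.com/sitemap.xml")
```

Each page becomes a `<url><loc>…</loc></url>` entry inside `<urlset>`, which is the list crawlers read once the `Sitemap:` line points them to the file.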

Common mistakes to avoid:

These are the most common robots.txt errors to avoid on your website:

Robots.txt is not in the root directory:

Search robots only look for the file in your website’s root directory, so its URL must consist of your domain followed directly by a forward slash and the filename (for example, yourwebsite.com/robots.txt). If the file sits in a subfolder, crawlers will not find it; move it to the root directory, which may require root access to your server.
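Because crawlers only check the root, the robots.txt location is fully determined by the scheme and host of any page URL. A small Python sketch using the standard-library `urllib.parse` derives it; the function name `robots_url` and the example URLs are hypothetical. It also shows that each subdomain is a separate host that needs its own file.

```python
from urllib.parse import urlsplit, urlunsplit


def robots_url(page_url: str) -> str:
    """Return the only robots.txt location crawlers check for page_url's host."""
    parts = urlsplit(page_url)
    # Keep scheme and host (including any port); replace the path with
    # /robots.txt and drop query strings and fragments.
    return urlunsplit((parts.scheme, parts.netloc, "/robots.txt", "", ""))


print(robots_url("https://www.example.com/blog/2024/post?id=7"))
print(robots_url("https://shop.example.com/cart"))
```

A file uploaded to `/blog/robots.txt` is never requested: for every page on `www.example.com`, crawlers ask for `https://www.example.com/robots.txt`.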

Wildcards are used poorly:

Robots.txt supports two wildcard characters:

1- Asterisk (*): matches any sequence of valid characters, including none.

2- Dollar sign ($): marks the end of a URL, so a rule applies only to URLs that end exactly as written (for example, with a particular file extension).

- Take a minimalist approach to wildcards, as they can apply restrictions to a much broader portion of your website than you intend.

- A single poorly placed asterisk can easily block robots from your entire site.

- Test your wildcard rules with a robots.txt testing tool to make sure they behave as expected.
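Python’s built-in `urllib.robotparser` does plain prefix matching and does not implement these wildcards, so the sketch below hand-rolls Google-style matching to let you try patterns offline: `*` matches any run of characters, a trailing `$` anchors the end, and when several rules match, the longest pattern wins, with `Allow` winning ties. This is a simplified model of Google’s documented behavior, not its actual parser, and the rules and paths are hypothetical.

```python
import re


def pattern_to_regex(pattern: str) -> re.Pattern:
    """Translate a robots.txt path pattern into a regex matched from the path start."""
    anchored = pattern.endswith("$")
    if anchored:
        pattern = pattern[:-1]
    # Escape every literal piece, then join the pieces with ".*" where "*" was.
    body = ".*".join(re.escape(part) for part in pattern.split("*"))
    return re.compile(body + ("$" if anchored else ""))


def is_allowed(rules: list[tuple[str, str]], path: str) -> bool:
    """Longest matching pattern wins; Allow wins ties; no match means allowed."""
    best_len, allowed = -1, True
    for directive, pattern in rules:
        if not pattern:
            continue  # an empty value sets no rule
        if pattern_to_regex(pattern).match(path):  # re.match anchors at the start
            is_allow = directive.lower() == "allow"
            if len(pattern) > best_len or (len(pattern) == best_len and is_allow):
                best_len, allowed = len(pattern), is_allow
    return allowed


# Hypothetical rules: block PDFs and any URL with a query string,
# except PDFs under /guides/.
RULES = [("Disallow", "/*.pdf$"), ("Disallow", "/*?"), ("Allow", "/guides/*.pdf$")]

for path in ("/files/price-list.pdf", "/files/price-list.pdf.html",
             "/shop?sort=price", "/guides/setup.pdf"):
    print(path, "allowed" if is_allowed(RULES, path) else "blocked")
```

Adding `("Disallow", "/*")` to `RULES` shows how one stray asterisk blocks almost everything: of the four sample paths, only `/guides/setup.pdf` would stay allowed, because its longer `Allow` rule still wins.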

Stylesheets and scripts are blocked:

Blocking crawler access to external JavaScript and CSS files can prevent Googlebot from rendering your pages correctly, which can hurt how they are understood and ranked. To fix this, remove the blocking line from your robots.txt file, or add an Allow exception that restores access to the necessary CSS and JavaScript files.
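A sketch of the exception approach, restoring access to stylesheets and scripts inside an otherwise blocked directory. The `/assets/` layout is a hypothetical example, and Python’s `urllib.robotparser` is used only to confirm the effect. That parser applies the first matching rule, so the `Allow` lines are listed first; Google applies the most specific (longest) matching rule, so the order does not matter there.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical layout: /assets/ is blocked, but its css/ and js/ folders are not.
ROBOTS_TXT = """\
User-agent: *
Allow: /assets/css/
Allow: /assets/js/
Disallow: /assets/
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

for path in ("/assets/css/main.css", "/assets/js/app.js", "/assets/raw/export.zip"):
    ok = parser.can_fetch("Googlebot", "https://www.example.com" + path)
    print(path, "crawlable" if ok else "blocked")
```

The stylesheet and script come back `crawlable`, while the rest of `/assets/` stays `blocked`.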

No URL for the sitemap:

Include your XML sitemap URL in your robots.txt file. This gives Googlebot a head start in understanding your site’s structure and main pages. Leaving it out is not strictly an error, but including it is a simple way to support your SEO efforts.

Access to development sites:

To keep crawlers away from pages under development, add a universal disallow rule (`User-agent: *` followed by `Disallow: /`) to the development site’s robots.txt file, and remove it when the finished site launches. Forgetting that last step is a common cause of crawling and indexing problems: if a newly launched site receives no real-world traffic or performs poorly in search, check its robots.txt file for a leftover universal user-agent disallow rule. For development sites that must stay private, password protection is more reliable than robots.txt.
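A quick launch check can catch a leftover universal block. The sketch below, which assumes you already have the robots.txt text in hand, uses Python’s `urllib.robotparser` to ask whether a given crawler may fetch the homepage; the function name `blocks_homepage` and both example files are hypothetical.

```python
from urllib.robotparser import RobotFileParser


def blocks_homepage(robots_txt: str, user_agent: str = "Googlebot") -> bool:
    """Return True if robots_txt stops user_agent from crawling the site root."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return not parser.can_fetch(user_agent, "/")


staging_rules = "User-agent: *\nDisallow: /"      # typical development-site block
launch_rules = "User-agent: *\nDisallow: /cart/"  # hypothetical production file

print(blocks_homepage(staging_rules))  # True means the universal block is still there
print(blocks_homepage(launch_rules))
```

This prints `True` for the development file and `False` for the production file, so a `True` after launch is the warning sign described above.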

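Using absolute URLs:

Absolute URLs are best practice for canonical and hreflang tags, but in robots.txt, Allow and Disallow rules should use paths relative to the site root, starting with “/”, as Google’s robots.txt documentation outlines. An absolute URL in these rules does not guarantee that crawlers will interpret it as intended. The Sitemap line is the exception: it takes a full URL.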
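The sketch below shows why root-relative paths are the safe choice, at least for one real parser. Python’s `urllib.robotparser` compares the rule text against the path of the requested URL, so an absolute URL in a `Disallow` line never matches and the “blocked” page stays crawlable. This illustrates that parser’s behavior only; the helper name `can_crawl` and the example URLs are hypothetical.

```python
from urllib.robotparser import RobotFileParser


def can_crawl(robots_txt: str, url: str) -> bool:
    """Parse robots_txt and report whether a generic bot may fetch url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch("*", url)


page = "https://www.example.com/private/plan.html"
absolute_rule = "User-agent: *\nDisallow: https://www.example.com/private/"
relative_rule = "User-agent: *\nDisallow: /private/"

print(can_crawl(absolute_rule, page))  # True: the absolute rule fails to block it
print(can_crawl(relative_rule, page))  # False: the root-relative rule blocks it
```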

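Unsupported and deprecated directives:

Some guides still recommend crawl-delay and noindex rules in robots.txt, but Google does not support either. Google also announced in 2023 that it was retiring the crawl-rate setting in Search Console. To keep a page out of Google’s index, use a noindex robots meta tag or an X-Robots-Tag HTTP header at the page level instead, and make sure the page is not blocked in robots.txt, since crawlers must be able to fetch it to see the tag.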
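Support for these directives varies from crawler to crawler, which is why relying on them is risky. As one example, Python’s `urllib.robotparser` does read `Crawl-delay` (which Googlebot ignores) and simply skips a `Noindex` line it does not understand. The file below is hypothetical.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical file mixing supported and unsupported directives.
ROBOTS_TXT = """\
User-agent: *
Crawl-delay: 10
Noindex: /old-offers/
Disallow: /tmp/
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

# This parser honors Crawl-delay (Googlebot does not).
print(parser.crawl_delay("*"))
# "Noindex" is not a rule it understands, so the page remains crawlable.
print(parser.can_fetch("*", "https://www.example.com/old-offers/sale.html"))
```

This prints `10` and then `True`: the same file means different things to different crawlers, and the `Noindex` line protects nothing.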

Finally, even though it is only a few lines of code, the robots.txt file significantly shapes how search engines interact with your website. Understanding and adjusting its crawl directives is crucial for a successful SEO strategy, whatever the size of your site or eCommerce platform.

Learn how to optimize your website’s search visibility with a well-structured robots.txt file. Discover SEOKhana, your trusted SEO partner.